Lab 06: SAC

In this lab, you need to implement SAC to solve the Pendulum-v0 task.

Understand SAC

Read the document from OpenAI Spinning Up HERE

Focus on understanding the twin Q networks and entropy regularization. Feel free to skip the action squashing part, since we already covered a similar element in PPO.

Start Code

Adapt code from both DDPG (because it is off-policy and uses replay buffer) and PPO (because it uses stochastic policy).

What to change

Stochastic Policy

- Use DDPG code as a base and migrate the related code from PPO to change the deterministic policy to a stochastic policy.

- Keep the neural network structure similar to DDPG. Only change the last layer to output \(\mu\) and \(\theta\) (remove the Tanh).

- Remove the target network for the actor, target_act_network, to be consistent with the original paper setup.
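The steps above can be sketched roughly as follows. This is only an illustration: the class name GaussianActor, the two-head layout, the 400/300 hidden sizes borrowed from the Q network, and the log-std clamp are all assumptions, not the lab's reference solution.

```python
import torch
import torch.nn as nn
from torch.distributions import Normal


class GaussianActor(nn.Module):
    """Hypothetical stochastic actor: DDPG-style trunk, two linear heads, no Tanh."""

    def __init__(self, n_state, n_action):
        super().__init__()
        self.trunk = nn.Sequential(
            nn.Linear(n_state, 400), nn.ReLU(),
            nn.Linear(400, 300), nn.ReLU(),
        )
        self.mu_head = nn.Linear(300, n_action)       # mean of the Gaussian
        self.log_std_head = nn.Linear(300, n_action)  # log of the standard deviation

    def forward(self, obs):
        h = self.trunk(obs)
        mu = self.mu_head(h)
        # Clamping the log-std is an assumed stabiliser, not part of the lab text.
        log_std = self.log_std_head(h).clamp(-20.0, 2.0)
        return Normal(mu, log_std.exp())


actor = GaussianActor(n_state=3, n_action=1)  # Pendulum: 3 observations, 1 action
dist = actor(torch.zeros(5, 3))
act = dist.rsample()
```

Predicting the log of the standard deviation and exponentiating it is one common way to keep the scale positive; a softplus on a raw output would serve the same purpose.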

Twin Q networks

- Rename the original q_net as q1_net, target_q_net as target_q1_net.

- Create another Q network as q2_net. Feel free to use deepcopy.

self.q1_net = nn.Sequential(
    nn.Linear(n_state + n_action, 400),
    nn.ReLU(),
    nn.Linear(400, 300),
    nn.ReLU(),
    nn.Linear(300, 1)
)
self.q2_net = nn.Sequential(
    nn.Linear(n_state + n_action, 400),
    nn.ReLU(),
    nn.Linear(400, 300),
    nn.ReLU(),
    nn.Linear(300, 1)
)
self.q1_net.to(device)
self.target_q1_net = copy.deepcopy(self.q1_net)
self.target_q1_net.to(device)
self.q2_net.to(device)
self.target_q2_net = copy.deepcopy(self.q2_net)
self.target_q2_net.to(device)

You also need to create a dedicated optimizer for q2_net.
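As a rough sketch of what separate optimizers could look like, the snippet below gives each critic its own Adam optimizer and regresses both onto the same TD target. The helper names make_q_net and update_critics, the choice of Adam, and the learning rate are assumptions for illustration only.

```python
import copy
import torch
import torch.nn as nn

n_state, n_action = 3, 1  # Pendulum dimensions


def make_q_net():
    return nn.Sequential(
        nn.Linear(n_state + n_action, 400), nn.ReLU(),
        nn.Linear(400, 300), nn.ReLU(),
        nn.Linear(300, 1),
    )


q1_net, q2_net = make_q_net(), make_q_net()  # independent initialisations
target_q1_net = copy.deepcopy(q1_net)
target_q2_net = copy.deepcopy(q2_net)

# One optimizer per critic; the learning rate is an assumed value.
q1_optim = torch.optim.Adam(q1_net.parameters(), lr=1e-3)
q2_optim = torch.optim.Adam(q2_net.parameters(), lr=1e-3)


def update_critics(obs, act, y):
    """Regress both critics onto the same detached TD target y."""
    q_input = torch.cat([obs, act], dim=1)
    y = y.detach()
    loss_q1 = ((q1_net(q_input).squeeze(-1) - y) ** 2).mean()
    loss_q2 = ((q2_net(q_input).squeeze(-1) - y) ** 2).mean()
    for optim, loss in ((q1_optim, loss_q1), (q2_optim, loss_q2)):
        optim.zero_grad()
        loss.backward()
        optim.step()
    return loss_q1.item(), loss_q2.item()
```

A single optimizer over the parameters of both critics would also work; keeping them separate simply mirrors the existing DDPG structure.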

- When calculating the logprob loss from the actor network, refer to the similar part in PPO. Carefully follow the pseudocode in the Spinning Up document to make sure the target networks are used in the correct places.

A new kind of Q

As one of the primary contributions of the paper, SAC adapts soft Q-learning, which incorporates entropy into the Q function. More mathematical details can be found in the paper or the OpenAI tutorial.

If you have the twin Q networks and stochastic policy network set up, the TD target of soft Q can be calculated as

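# act_ and dist come from the current policy evaluated at next_obs
logprob_ = dist.log_prob(act_).squeeze()
# Calculate y; wrap this in torch.no_grad() or detach y before the critic loss
q_input = torch.cat(
    [next_obs, act_], dim=1)
q1_next = self.target_q1_net(q_input).squeeze()
q2_next = self.target_q2_net(q_input).squeeze()
# The entropy term is discounted together with the minimum target Q,
# as in the Spinning Up pseudocode
y = reward + self.gamma * (1 - done) * \
    (torch.min(q1_next, q2_next) - self.alpha*logprob_)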

Note that logprob_ here needs to be calculated the same way we did in PPO. self.alpha is a hyperparameter called the temperature factor. Feel free to set it to 0.2 for now. You will need to tweak this parameter later.
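For reference, the target prescribed by the Spinning Up pseudocode can be written as below, where \(\tilde{a}'\) is sampled from the current policy at the next state \(s'\) and \(d\) is the done flag. The symbol names are chosen here for illustration.

```latex
y = r + \gamma (1 - d) \left( \min_{j=1,2} Q_{\text{targ},j}(s', \tilde{a}') - \alpha \log \pi(\tilde{a}' \mid s') \right),
\qquad \tilde{a}' \sim \pi(\cdot \mid s')
```

Note that the entropy term \(-\alpha \log \pi\) is scaled by \(\gamma (1 - d)\) together with the minimum target Q value, so it vanishes on terminal transitions.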

Reparameterization trick for training act_net

Here is an implementation you can adapt to do reparameterization in PyTorch:

from torch.distributions import Normal

dist = Normal(mu, var)
act_ = dist.rsample()
logprob_ = dist.log_prob(act_).squeeze()

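Here, mu and var are outputs of act_net. Note that torch.distributions.Normal takes the standard deviation as its second argument, so var must hold a standard deviation despite its name. By using rsample() instead of sample(), we make act_ carry gradients back to act_net.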
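The difference between the two sampling calls can be checked with a small experiment. In this sketch, mu and std are plain tensors standing in for the outputs of act_net; the values are arbitrary.

```python
import torch
from torch.distributions import Normal

mu = torch.tensor([0.5], requires_grad=True)   # stand-in for an act_net output
std = torch.tensor([1.0], requires_grad=True)  # Normal expects a standard deviation

dist = Normal(mu, std)
a_sample = dist.sample()    # drawn without tracking gradients
a_rsample = dist.rsample()  # computed as mu + std * eps with eps ~ N(0, 1)

print(a_sample.requires_grad)   # False
print(a_rsample.requires_grad)  # True

a_rsample.sum().backward()
print(mu.grad)  # tensor([1.]) because d(mu + std * eps) / d(mu) = 1
```

Because the noise eps is drawn independently of the parameters, the gradient of any loss built on a_rsample can flow back into mu and std.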

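To calculate the loss of act_net, you can do

logprob_ = dist.log_prob(act_).squeeze()
loss_act = (self.alpha*logprob_ - y).mean()

where y here is the minimum of the Q values from q1_net and q2_net evaluated at the current obs and the reparameterized act_ (not the TD target from the previous section).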
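Putting the pieces together, the actor loss might be computed along these lines. The helper actor_loss and the ToyActor stand-in are hypothetical, and act_net is assumed to return a (mu, std) pair; adapt this to however your own act_net exposes its outputs.

```python
import torch
import torch.nn as nn
from torch.distributions import Normal


def actor_loss(act_net, q1_net, q2_net, obs, alpha=0.2):
    """Hypothetical helper; act_net is assumed to return (mu, std) for a batch."""
    mu, std = act_net(obs)
    dist = Normal(mu, std)
    act_ = dist.rsample()                       # keeps the gradient path to act_net
    logprob_ = dist.log_prob(act_).sum(dim=-1)  # sum over action dimensions
    q_input = torch.cat([obs, act_], dim=1)
    q_min = torch.min(q1_net(q_input), q2_net(q_input)).squeeze(-1)
    return (alpha * logprob_ - q_min).mean()


class ToyActor(nn.Module):
    """Tiny stand-in actor used only to exercise the helper."""

    def __init__(self):
        super().__init__()
        self.mu = nn.Linear(3, 1)
        self.log_std = nn.Parameter(torch.zeros(1))

    def forward(self, obs):
        mu = self.mu(obs)
        return mu, self.log_std.exp().expand_as(mu)


act_net = ToyActor()
q1_net = nn.Linear(4, 1)  # toy critics taking [obs, act]
q2_net = nn.Linear(4, 1)
loss = actor_loss(act_net, q1_net, q2_net, torch.randn(16, 3))
loss.backward()
```

With a single action dimension, summing over the last axis is equivalent to the squeeze() used above. Only the actor's optimizer should step on this loss; the backward pass also leaves gradients on the critics, so zero them before the next critic update.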

Expected Performance

Environment: Pendulum-v1

Target: Average reward > -200 over 100 test episodes by episode 300

Typical Training Progress:

- Episodes 0-50: Reward -1000 to -500 (exploration phase)

- Episodes 50-150: Reward -500 to -200 (learning phase)

- Episodes 150-300: Reward -200 to -150 (convergence)

- Episodes 300+: Stable performance around -150 to -250

Comparison to A2C (Lab 05):

- SAC should be more stable (less variance in training curve)

- SAC should converge faster (fewer episodes needed)

- SAC should achieve better final performance (-150 to -200 vs -200 to -300)

Grading Criteria (15 pts for performance):

- Full credit: Reward > -200 by episode 300

- Partial credit: Reward > -300 by episode 500 (meets minimum)

- Minimal credit: Reward > -500 (shows some learning)

Alpha (Temperature) Impact:

- α = 0.0: No entropy bonus (equivalent to standard actor-critic, less exploration)

- α = 0.2: Balanced exploration and exploitation (recommended)

- α = 0.5: High exploration (slower convergence but potentially better final performance)

- You should observe these trade-offs in your experiments

Troubleshooting: If SAC performs worse than A2C:

- Check that both Q-networks are updating

- Verify entropy term is in the Q-target: y = r + γ(1 - d)(min(Q1, Q2) - α*log π)

- Ensure target networks are syncing correctly

- Confirm reparameterization trick uses rsample() not sample()
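If your DDPG base syncs targets with Polyak averaging, a check like the following can confirm that the target actually moves toward the online network. The function name soft_update and the value of tau are assumptions; use whatever your DDPG code already defines.

```python
import copy
import torch
import torch.nn as nn


def soft_update(net, target_net, tau=0.005):
    """Polyak averaging: target <- (1 - tau) * target + tau * net."""
    with torch.no_grad():
        for p, p_targ in zip(net.parameters(), target_net.parameters()):
            p_targ.mul_(1.0 - tau).add_(tau * p)


q1_net = nn.Linear(4, 1)
target_q1_net = copy.deepcopy(q1_net)

# Pretend a gradient step moved every online parameter by 1.
with torch.no_grad():
    for p in q1_net.parameters():
        p.add_(1.0)

soft_update(q1_net, target_q1_net, tau=0.005)
gap = (q1_net.weight - target_q1_net.weight).abs().max().item()
print(gap)  # close to 0.995: the target moved 0.5% of the way
```

The same call needs to be made for both target_q1_net and target_q2_net after each update; forgetting the second one is an easy mistake when adapting single-critic code.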

Write Report

- Run the program with three self.alpha settings: 0.0, 0.2, 0.5. Record the training logs from the three setups and plot the reward curves.

- Write your observations/insights on the impact of self.alpha.

- Given the several contributions of SAC (e.g., twin Q nets, soft Q learning), which part do you think has the most significant impact on the performance?
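Raw episode rewards are noisy, so smoothing them before plotting can make the three curves easier to compare. Below is a minimal sketch of a trailing moving average; the function name, the window size, and the reward numbers are made-up placeholders, not real training results.

```python
def moving_average(rewards, window=10):
    """Trailing mean over up to `window` most recent episodes."""
    smoothed = []
    running = 0.0
    for i, r in enumerate(rewards):
        running += r
        if i >= window:
            running -= rewards[i - window]  # drop the episode leaving the window
        smoothed.append(running / min(i + 1, window))
    return smoothed


# Placeholder logs: one list of episode rewards per alpha setting.
logs = {
    0.0: [-1200.0, -900.0, -700.0, -400.0],
    0.2: [-1100.0, -800.0, -500.0, -200.0],
}
curves = {alpha: moving_average(r, window=2) for alpha, r in logs.items()}
print(curves[0.2])  # [-1100.0, -950.0, -650.0, -350.0]
```

Each smoothed list can then be passed to your plotting library of choice, with one line per alpha value on the same axes.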

Deliverables and Rubrics

Overall, you need to complete the environment installation and be able to run the demo code. You need to submit:

- (70 pts) A PDF generated from running your code in a Jupyter notebook, with accuracy reported in the program output.

- (15 pts) Performance: The average reward reaches above -300 within 500 trials.

- (15 pts) Reasonable answers to the questions (backed up with experiment results)

Debuging Tips

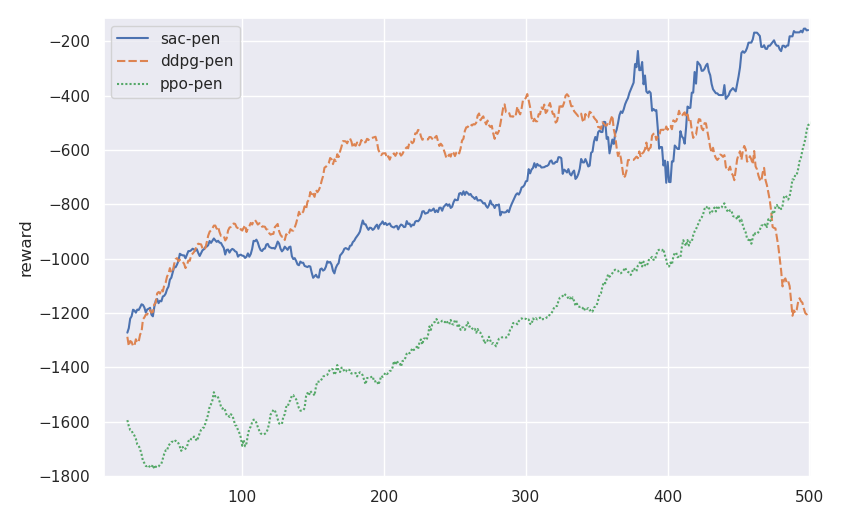

As a reference, here is my training curves comparing with DDPG and PPO: