Lab 04: DDPG

Understand DDPG

Read the DDPG documentation from OpenAI Spinning Up.

Write Code

- Adapt the DQN code from the previous demo.

- Solve the MountainCarContinuous-v0 environment:

      env = gym.make("MountainCarContinuous-v0", render_mode="rgb_array")
      n_state = int(np.prod(env.observation_space.shape))
      n_action = int(np.prod(env.action_space.shape))
      print("# of state", n_state)
      print("# of action", n_action)

What you need to change:

Add another neural net (and its target) as the policy function, a.k.a. the actor. It takes obs as input and outputs an action, which in this case should be an np.array.

- Incorporate the action range self.act_lim into act_net.
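
One way to fold the action range into the actor is sketched below, assuming PyTorch. The Actor class name, the act method, and the act_lim=1.0 default are illustrative assumptions rather than part of the demo code; the layer sizes follow the networks listed later in this lab.

```python
import numpy as np
import torch
import torch.nn as nn


class Actor(nn.Module):
    """Hypothetical wrapper: tanh output in [-1, 1], scaled by act_lim."""

    def __init__(self, n_state, n_action, act_lim=1.0):
        super().__init__()
        self.act_lim = act_lim
        self.act_net = nn.Sequential(
            nn.Linear(n_state, 400), nn.ReLU(),
            nn.Linear(400, 300), nn.ReLU(),
            nn.Linear(300, n_action), nn.Tanh(),
        )

    def forward(self, obs):
        # Tanh bounds the raw output, so the product stays in [-act_lim, act_lim].
        return self.act_lim * self.act_net(obs)

    @torch.no_grad()
    def act(self, obs):
        # Single observation (np.array) in, action as np.array out.
        obs_t = torch.as_tensor(obs, dtype=torch.float32).unsqueeze(0)
        return self(obs_t).squeeze(0).numpy()


actor = Actor(n_state=2, n_action=1, act_lim=1.0)
action = actor.act(np.array([-0.5, 0.0]))
print(action.shape)
```

For MountainCarContinuous-v0 the limit can be read from env.action_space.high instead of being hard-coded.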

Change the q_net to take [state, act] as input and output a single value as the Q value.

- This includes changing all the places where you call self.q_net.

- The way to combine state and act is like this:

      q_input = torch.cat(
          [next_obs, self.act_lim * self.target_act_net(next_obs)], dim=1)
      y = reward + self.gamma * (1 - done) * \
          self.target_q_net(q_input).squeeze()
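
The shapes involved in the concatenation are easy to get wrong, so here is a small sketch of them, assuming PyTorch and batched tensors. The hidden size of 32, the batch size, and the 0.99 discount are arbitrary illustrative choices, and a plain q_net stands in for the target networks because only the shapes matter here.

```python
import torch
import torch.nn as nn

n_state, n_action, batch = 2, 1, 4
q_net = nn.Sequential(nn.Linear(n_state + n_action, 32), nn.ReLU(), nn.Linear(32, 1))

obs = torch.randn(batch, n_state)
act = torch.rand(batch, n_action) * 2 - 1   # actions in [-1, 1]
reward = torch.randn(batch)
done = torch.tensor([0.0, 0.0, 1.0, 0.0])

q_input = torch.cat([obs, act], dim=1)       # (batch, n_state + n_action)
q_raw = q_net(q_input)                       # (batch, 1)
q = q_raw.squeeze(-1)                        # (batch,), matches reward and done

# Pitfall: mixing (batch, 1) with (batch,) silently broadcasts to (batch, batch).
bad = reward + 0.99 * (1 - done) * q_raw
good = reward + 0.99 * (1 - done) * q
print(q_input.shape, bad.shape, good.shape)
# torch.Size([4, 3]) torch.Size([4, 4]) torch.Size([4])
```

Note that squeeze(-1) only drops the last dimension, whereas a bare squeeze() would also collapse the batch dimension when the batch size is 1.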

Change the random policy behavior.

- Instead of epsilon-greedy exploration, simply add random noise to the action:

      act += self.noise * np.random.randn(n_action)

  where self.noise is a hyperparameter you can tweak.
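
A minimal sketch of the noisy action, assuming NumPy. The noisy_action helper name is hypothetical, and the clipping step is an assumption that is not required by the text above; it mirrors what the Spinning Up DDPG implementation does to keep actions inside the valid range.

```python
import numpy as np


def noisy_action(act, noise, act_lim):
    """Add Gaussian exploration noise, then clip back into [-act_lim, act_lim]."""
    act = np.asarray(act, dtype=np.float32)
    act = act + noise * np.random.randn(*act.shape)
    # Assumption: clip so the environment never sees an out-of-range action.
    return np.clip(act, -act_lim, act_lim).astype(np.float32)


act = noisy_action(np.array([0.3]), noise=2.0, act_lim=1.0)
print(act)
```

With a noise scale as large as 2 and an action limit of 1, most early actions end up at one of the two bounds, which is worth keeping in mind when you compare noise settings.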

Over the course of training, feel free to decay this noise so the policy becomes less random:

    if agent.noise > 0.005:
        agent.noise -= (1/200)

Note: Decay this noise after every trial/episode, not every step.
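
To see what this schedule looks like over a run, here is a small sketch in plain Python. The decay_noise helper name is hypothetical; the starting value of 2 and the 500 episodes are taken from my own setup described later in this lab.

```python
def decay_noise(noise, step=1 / 200, floor=0.005):
    """One per-episode decay step, mirroring the rule above."""
    if noise > floor:
        noise -= step
    return noise


noise = 2.0
history = []
for episode in range(500):
    # ... run one full episode with the current noise here ...
    history.append(noise)
    noise = decay_noise(noise)  # once per episode, not once per step

print(history[0], round(history[200], 3))
```

Starting from 2 and subtracting 1/200 per episode, the noise shrinks linearly and bottoms out after roughly 400 episodes, so most of a 500-episode run is spent exploring with substantial noise.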

Change act to FloatTensor in the ReplayBuffer.

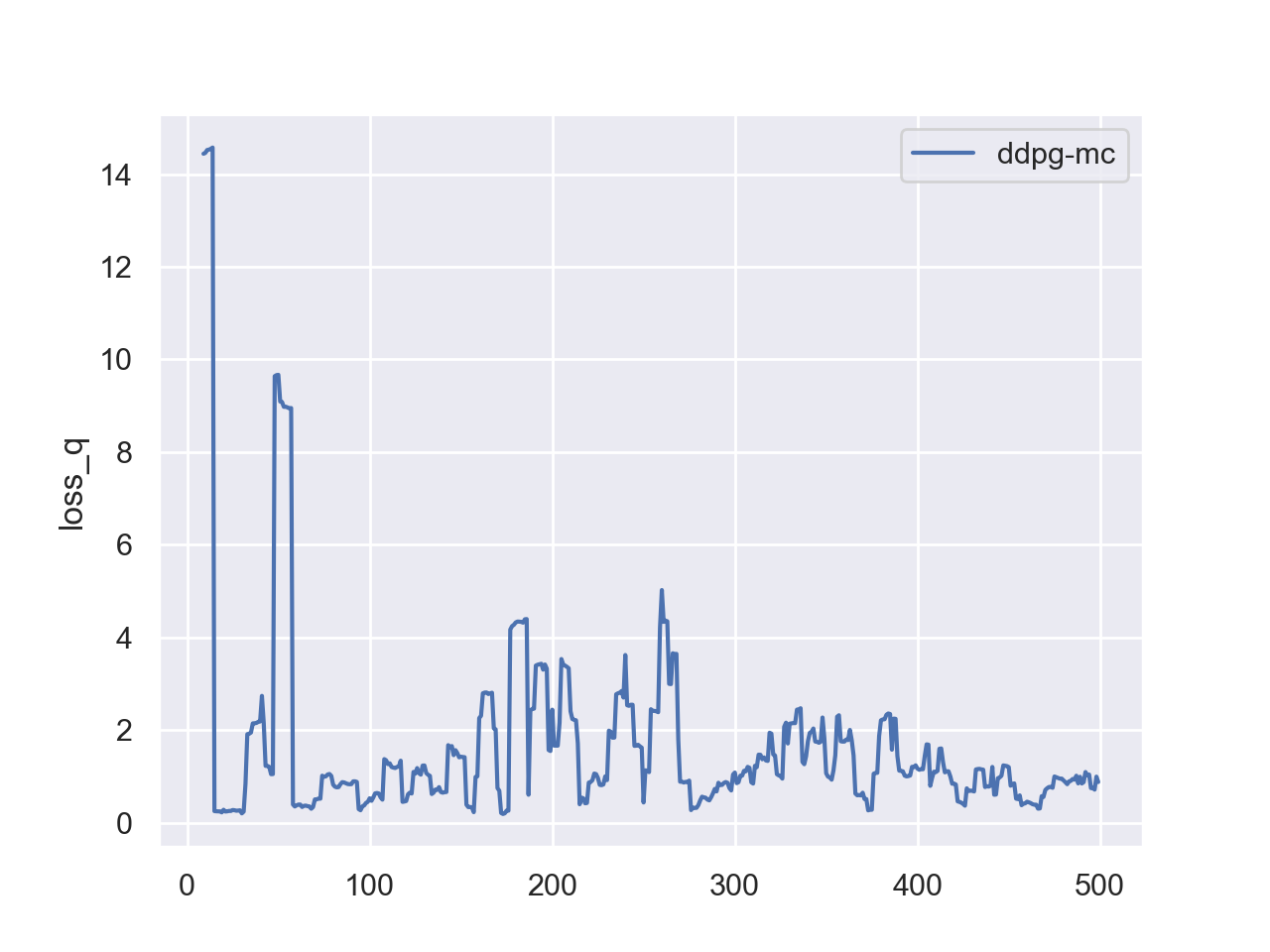

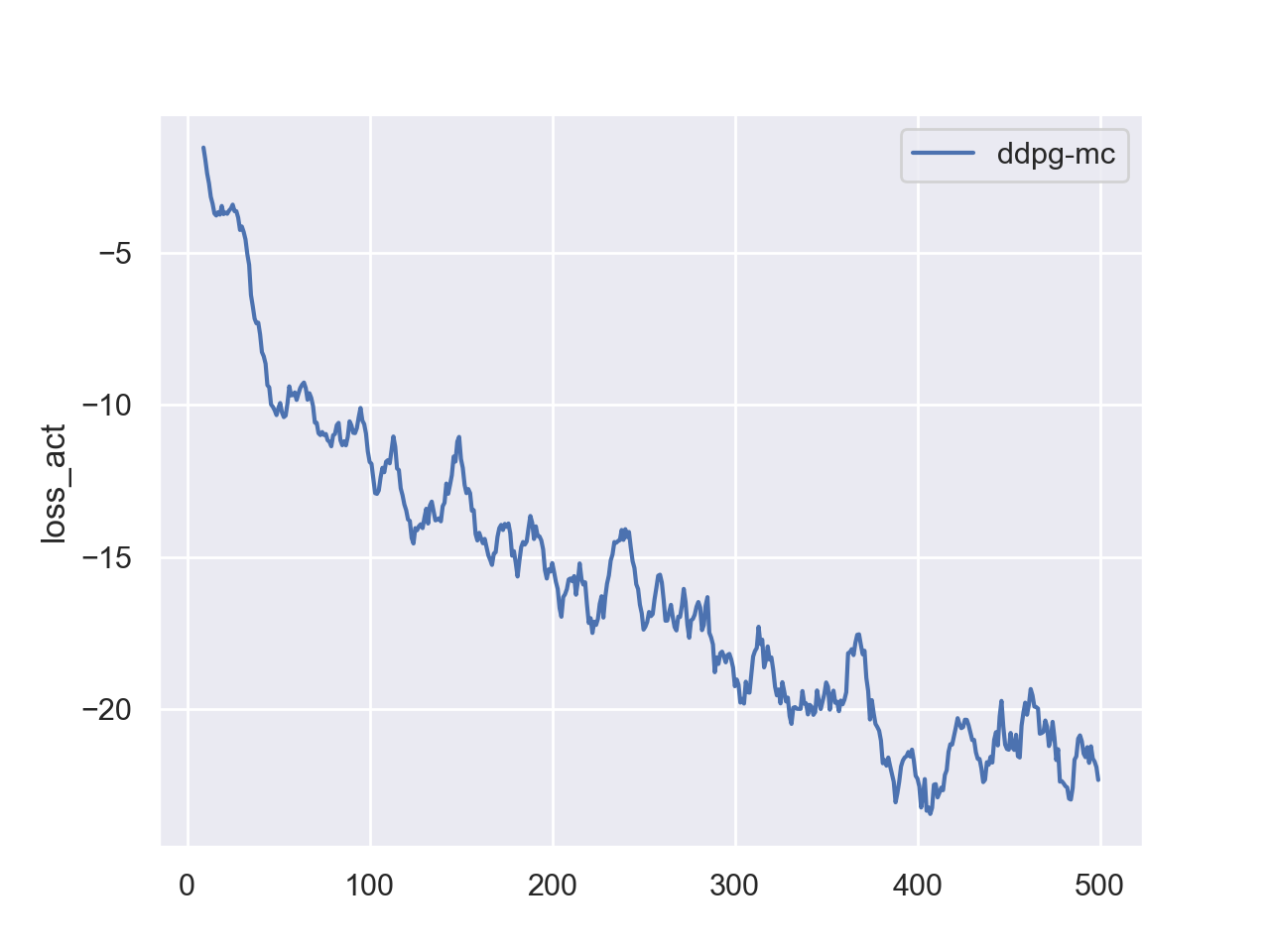

Return two kinds of loss for inspection, loss_q and loss_act
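
A rough sketch of an update step that returns both losses, assuming PyTorch. The mlp helper, the hidden size of 32, the Adam learning rates, and the mean-squared-error critic loss are all illustrative assumptions; your adapted DQN code may differ. Target-network synchronization is left out on purpose, so keep whatever scheme the DQN demo uses.

```python
import torch
import torch.nn as nn

n_state, n_action, act_lim, gamma = 2, 1, 1.0, 0.99


def mlp(n_in, n_out, out_act=None):
    # Small stand-in network (hypothetical helper, sizes chosen for brevity).
    layers = [nn.Linear(n_in, 32), nn.ReLU(), nn.Linear(32, n_out)]
    if out_act is not None:
        layers.append(out_act)
    return nn.Sequential(*layers)


q_net, target_q_net = mlp(n_state + n_action, 1), mlp(n_state + n_action, 1)
act_net, target_act_net = mlp(n_state, n_action, nn.Tanh()), mlp(n_state, n_action, nn.Tanh())
target_q_net.load_state_dict(q_net.state_dict())
target_act_net.load_state_dict(act_net.state_dict())
q_opt = torch.optim.Adam(q_net.parameters(), lr=1e-3)
act_opt = torch.optim.Adam(act_net.parameters(), lr=1e-3)


def update(obs, act, reward, next_obs, done):
    # Critic step: regress Q(s, a) onto the bootstrapped target y.
    with torch.no_grad():
        q_input = torch.cat([next_obs, act_lim * target_act_net(next_obs)], dim=1)
        y = reward + gamma * (1 - done) * target_q_net(q_input).squeeze(-1)
    q = q_net(torch.cat([obs, act], dim=1)).squeeze(-1)
    loss_q = nn.functional.mse_loss(q, y)
    q_opt.zero_grad()
    loss_q.backward()
    q_opt.step()

    # Actor step: maximize Q(s, actor(s)), i.e. minimize its negative.
    pi = act_lim * act_net(obs)
    loss_act = -q_net(torch.cat([obs, pi], dim=1)).mean()
    act_opt.zero_grad()
    loss_act.backward()
    act_opt.step()

    return loss_q.item(), loss_act.item()


torch.manual_seed(0)
B = 8
losses = update(torch.randn(B, n_state), torch.rand(B, n_action) * 2 - 1,
                torch.randn(B), torch.randn(B, n_state), torch.zeros(B))
print(losses)
```

Because loss_act is the negative of the mean Q value under the current policy, it becomes negative once the critic predicts positive returns.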

Writing Report

Questions to answer:

- How does the setting of the noise scale self.noise impact the training and performance?

  - Preferably, try to run multiple experiments with different self.noise settings and plot the results.

- (Open-Ended question) What is the major downside of DDPG according to your understanding/observation?
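
For the noise-scale question, a sweep over several settings and seeds could be organized along these lines. This is only a sketch in plain Python: sweep and fake_train are hypothetical names, and fake_train returns random numbers as a placeholder, so replace it with your own training function that returns reward_list.

```python
import random
import statistics


def sweep(train_fn, noise_values, seeds):
    """Run train_fn(noise, seed) for every pair and average the curves per noise value."""
    results = {}
    for noise in noise_values:
        curves = [train_fn(noise, seed) for seed in seeds]
        # Average episode-by-episode across seeds.
        results[noise] = [statistics.mean(ep) for ep in zip(*curves)]
    return results


def fake_train(noise, seed, n_episode=5):
    # Placeholder only: ignores noise and returns random "rewards".
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n_episode)]


results = sweep(fake_train, noise_values=[0.5, 1.0, 2.0], seeds=[0, 1, 2])
for noise, curve in results.items():
    print(noise, [round(r, 2) for r in curve])
```

Each averaged curve can then be drawn with one plotting call per noise value (for example matplotlib's plt.plot with a label), which makes the settings directly comparable in a single figure.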

Deliverables and Rubrics

- (60 pts) PDF (exported from Jupyter notebook) and Python code.

- The report should include at least the reward curve over the training iterations (i.e., episodes).

- (15 pts) The results show that your implementation achieves the desired performance.

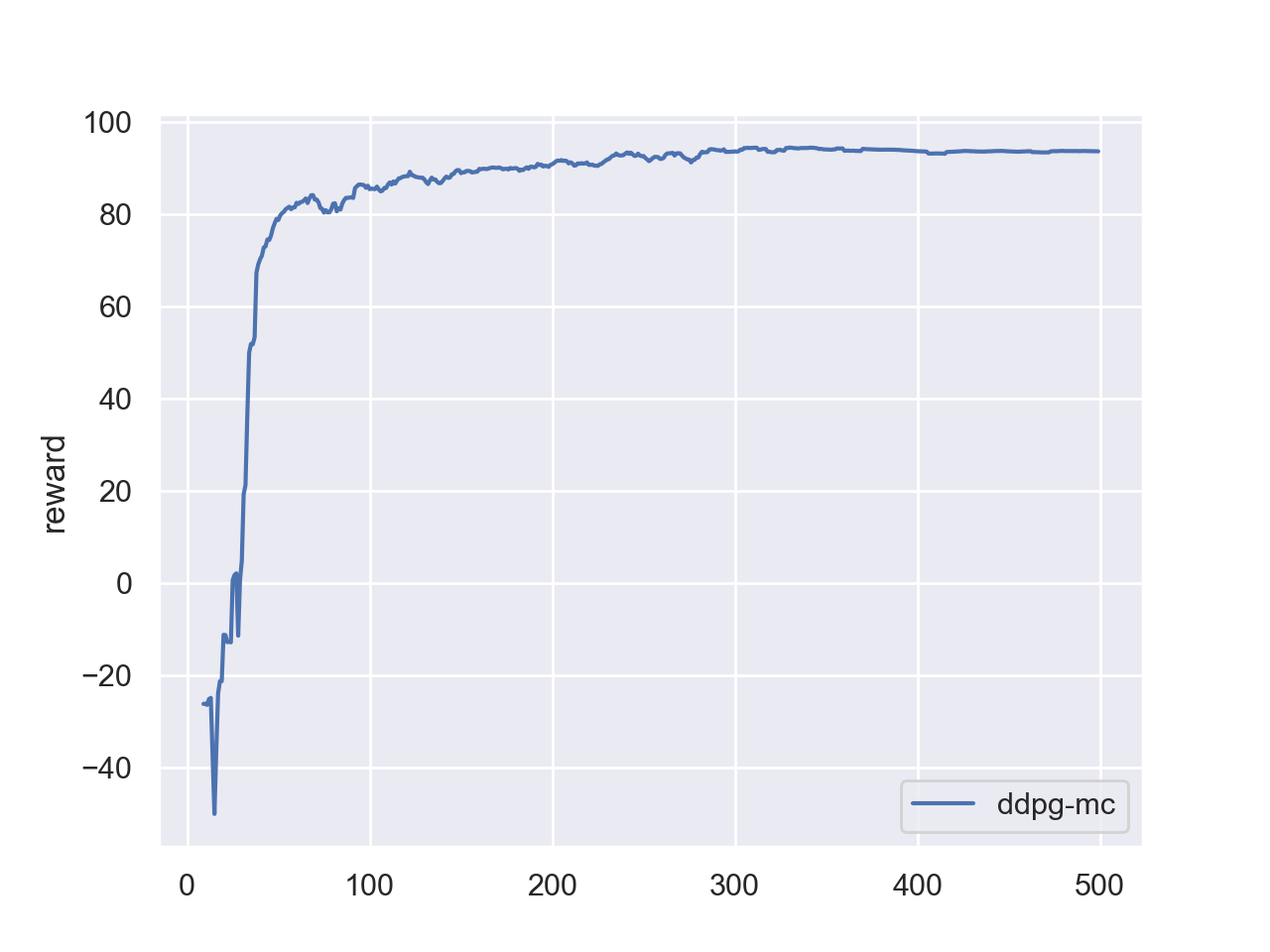

- If your code is correct, the mean reward should be above 80 after 300 episodes. (Check my plots below.)

- (15 pts) Most likely, your code will suffer from some instability: sometimes it learns effectively and quickly, but it can also get stuck at the initial stage and never learn. Try different random seeds to reproduce the results. In those bad cases, your loss_act remains positive and the agent doesn’t improve, which usually means the replay buffer only contains bad experiences from unsuccessful exploration. What are some possible ways to mitigate this issue? Try to implement one of them and show the result.

- (10 pts) Reasonable answers to the questions.
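
To try different random seeds in a repeatable way, a helper along these lines may be useful. It is a sketch: set_seed is a hypothetical name, and the PyTorch import is optional only so the snippet also runs without it.

```python
import random

import numpy as np

try:
    import torch
except ImportError:  # keeps the sketch usable without PyTorch installed
    torch = None


def set_seed(seed):
    """Seed the Python, NumPy and (if available) PyTorch generators."""
    random.seed(seed)
    np.random.seed(seed)
    if torch is not None:
        torch.manual_seed(seed)


set_seed(0)
first = np.random.randn(3)
set_seed(0)
second = np.random.randn(3)
print(np.allclose(first, second))  # True
```

The environment keeps its own generator. Depending on the gym version it is seeded with env.reset(seed=seed) or env.seed(seed), so check which one your installation supports.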

Debugging Tips

- Check the loss: loss_q should be positive, loss_act should be negative. A positive loss_act usually indicates that sparse or unsuccessful trials have been heavily used for training.

Here are my neural nets:

    self.q_net = nn.Sequential(
        nn.Linear(n_state + n_action, 400),
        nn.ReLU(),
        nn.Linear(400, 300),
        nn.ReLU(),
        nn.Linear(300, 1)
    )

    self.act_net = nn.Sequential(
        nn.Linear(n_state, 400),
        nn.ReLU(),
        nn.Linear(400, 300),
        nn.ReLU(),
        nn.Linear(300, n_action),
        nn.Tanh()
    )

I initialize the exploration noise to 2.

Here is my main training loop. Feel free to copy it (change the agent to your own policy):

    # Note: this loop uses the older gym API, where reset() returns obs and
    # step() returns 4 values. In gym >= 0.26, reset() returns (obs, info) and
    # step() returns (obs, reward, terminated, truncated, info).
    loss_q_list, loss_act_list, reward_list = [], [], []
    update_freq = 10
    n_step = 0
    loss_q, loss_act = 0, 0

    for i in tqdm(range(500)):
        obs, rew = env.reset(), 0
        while True:
            act = agent(obs)
            next_obs, reward, done, _ = env.step(act)
            rew += reward
            n_step += 1

            agent.replaybuff.add(obs, act, reward, next_obs, done)
            obs = next_obs

            # Start updating once the buffer holds more than 1000 transitions.
            if len(agent.replaybuff) > 1e3 and n_step % update_freq == 0:
                loss_q, loss_act = agent.update()

            if done:
                # if reward > 90:
                #     print("wow")
                break

        # Every 50 episodes, run an evaluation episode and print running means.
        if i > 0 and i % 50 == 0:
            run_episode(env, agent, True)[2]
            print("itr:({:>5d}) loss_q:{:>3.4f} loss_act:{:>3.4f} reward:{:>3.1f}".format(
                i, np.mean(loss_q_list[-50:]),
                np.mean(loss_act_list[-50:]),
                np.mean(reward_list[-50:])))

        # Decay the exploration noise once per episode.
        if agent.noise > 0.005:
            agent.noise -= (1/200)

        loss_q_list.append(loss_q)
        loss_act_list.append(loss_act)
        reward_list.append(rew)
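
The loop above prints a mean over the last 50 episodes; the same smoothing can be applied to the whole reward_list before plotting. A minimal sketch in plain Python follows (moving_average is a hypothetical helper, and the reward values are made up for the example):

```python
def moving_average(values, window=50):
    """Mean of the last `window` entries at each position (shorter at the start)."""
    out = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]  # drop the entry that left the window
        out.append(running / min(i + 1, window))
    return out


reward_list = [-10.0, -5.0, 0.0, 90.0, 92.0, 95.0]  # made-up rewards
smoothed = moving_average(reward_list, window=3)
print([round(x, 2) for x in smoothed])
# [-10.0, -7.5, -5.0, 28.33, 60.67, 92.33]
```

Plotting the smoothed curve next to the raw one makes it easier to judge whether the mean reward has really crossed 80.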

My training curves are like: